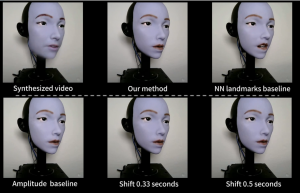

A nearly human robot

Robots with humanoid faces have long struggled with one challenge: synchronizing lip movements with audio so that their speech looks human. Lip movement is crucial in human communication because it captures our attention. The mismatch between lips and audio that is common in robots therefore makes them look lifeless.

A group of researchers from the Department of Computer Science at Columbia University has unveiled a humanoid robotic face with soft silicone lips actuated by a 10-degree-of-freedom mechanism. Until now, robotic lips have typically lacked the mechanical complexity needed to reproduce mouth movements, and existing synchronization methods have relied on manual controls, which limits their realism. The group used a self-supervised learning process based on a variational autoencoder (VAE), combined with a facial action transformer, which enables the robot to autonomously infer more realistic lip trajectories directly from speech audio.

The learned synchronization also generalizes across linguistic contexts, enabling the robot to articulate speech in 10 languages not encountered during training. The robot acquired this ability through observational learning rather than by following rules. It first learned to use its 26 facial motors by observing its own reflection in a mirror, and then learned to mimic human lip movements by watching hours of YouTube videos. As this is a learned skill, it will continue to improve with human interaction.